Introduction

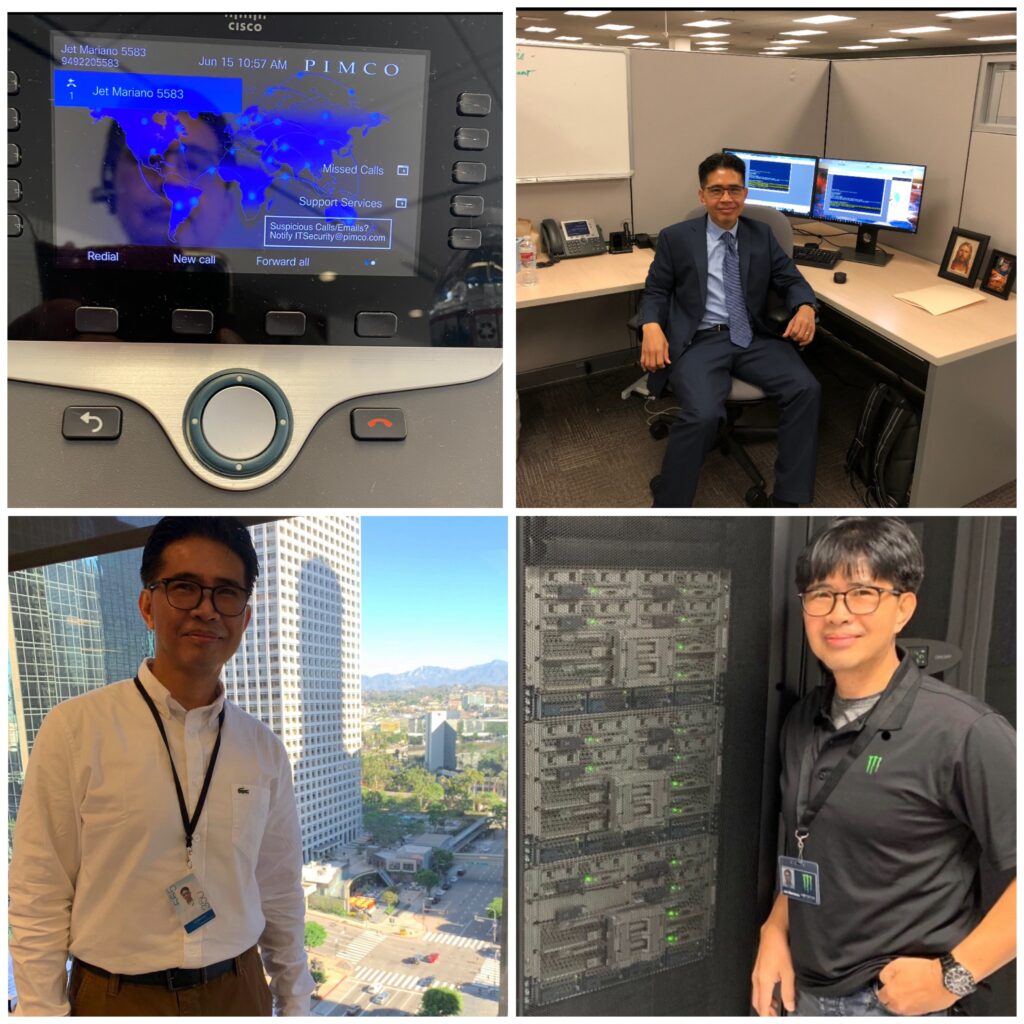

Infrastructure as Code is not optional anymore. Terraform gives you a declarative way to build, modify, and destroy cloud resources cleanly. This tutorial shows exactly how to install Terraform, create your first configuration, and connect it to Azure without affecting your company’s production environment. I used these steps to rebuild my own skills after leaving California and stepping into Utah’s quiet season of learning.

Step 1

Install Terraform using Winget

- Open PowerShell as admin

- Run the installer

winget install HashiCorp.Terraform --source winget

- Restart your PowerShell window

- Verify the installation

terraform -version

You should see something like

Terraform v1.14.0

Step 2

Create your Terraform workspace

- Create a folder

mkdir C:\terraform\test1

- Go inside the folder

cd C:\terraform\test1

- Create a new file

New-Item main.tf -ItemType File

Leave the file empty for now. Terraform just needs to see that a configuration file exists.

Step 3

Write your first Terraform configuration

Open main.tf and paste this:

provider "azurerm" {
  features {}
}

Nothing is created yet. This block only configures the provider; it defines no resources, so Terraform has nothing to build or change.

The goal is to connect Terraform to Azure safely.

Save the file.
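If you want terraform init to pick a predictable provider version, you can also declare the provider source and a version constraint. This is a minimal sketch and not part of the original steps; the "~> 4.0" constraint is an assumption, so adjust it to whatever version you actually want to use.

```hcl
# Optional: declares where the AzureRM provider comes from and which
# versions are acceptable. This block sits alongside the provider block
# in main.tf; it does not replace it.
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 4.0" # assumed constraint, not from the original tutorial
    }
  }
}
```

Without this block, terraform init typically resolves to the latest provider release available, which can change between runs on different machines.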

Step 4

Initialize Terraform

Run

terraform init

This downloads the AzureRM provider and sets up your working directory.

You should see

Terraform has been successfully initialized

Step 5

Install the Azure CLI

Terraform connects to Azure using your Azure CLI login. Install it with:

winget install Microsoft.AzureCLI

Verify it

az --version

Step 6

Log into Azure

Run

az login

A browser opens. Select your Azure account.

Important note

If you see Martin’s Azure subscription, stop here and do not run terraform apply.

Running terraform plan is safe because it only previews changes and never applies them.
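One way to guard against working in the wrong subscription is to pin the subscription in the provider block instead of relying on whichever one the Azure CLI has selected. The sketch below is a variant of the Step 3 provider block (use it in place of that block, not in addition to it). The variable name subscription_id is a hypothetical choice, and you would supply your own test subscription's ID when Terraform prompts for it.

```hcl
# Hypothetical input variable holding the ID of the subscription you
# intend to use (for example, a personal test subscription).
variable "subscription_id" {
  type        = string
  description = "ID of the Azure subscription Terraform is allowed to target"
}

provider "azurerm" {
  features {}

  # Pin the target subscription explicitly so a different default in
  # the Azure CLI cannot silently redirect Terraform.
  subscription_id = var.subscription_id
}
```

Version 4.x of the AzureRM provider expects a subscription ID to be supplied, either in the provider block as shown or through the ARM_SUBSCRIPTION_ID environment variable.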

Step 7

Check your Azure subscription

az account show

This confirms who you are logged in as and which subscription Terraform will use.
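You can run a similar check from inside Terraform using read-only data sources. This is a minimal sketch, not part of the original steps; the output names are arbitrary. Data sources only read information, so nothing is created in Azure.

```hcl
# Read-only lookups: these query Azure but create nothing.
data "azurerm_subscription" "current" {}

data "azurerm_client_config" "current" {}

# Print the subscription and tenant Terraform is actually using.
output "subscription_name" {
  value = data.azurerm_subscription.current.display_name
}

output "subscription_id" {
  value = data.azurerm_client_config.current.subscription_id
}

output "tenant_id" {
  value = data.azurerm_client_config.current.tenant_id
}
```

If the values shown by terraform plan do not match what az account show reports for your test subscription, stop and fix the login before going further.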

Step 8

Run your first Terraform plan

terraform plan

This reads your main.tf and checks for any required changes.

Since your configuration defines no resources, the output will say:

No changes. Your infrastructure matches the configuration.
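To see what a non-empty plan looks like, you could add a single resource to main.tf. The sketch below uses a resource group because it is free and easy to remove; the name and location are placeholders, not values from the original tutorial.

```hcl
# Hypothetical example resource: an empty resource group.
# The name and location are placeholders; pick your own.
resource "azurerm_resource_group" "example" {
  name     = "rg-terraform-test1"
  location = "westus2"
}
```

Running terraform plan again should then propose adding one resource. Only run terraform apply if az account show confirms you are in a subscription you are allowed to change.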

Step 9

Useful Azure CLI commands for Cloud Engineers

Check all resource groups

az group list -o table

Check all VMs

az vm list -o table

Check storage accounts

az storage account list -o table

Check virtual networks

az network vnet list -o table

Check VM status

az vm get-instance-view --name VMNAME --resource-group RGNAME --query "instanceView.statuses[1].displayStatus"

Check Azure AD users

az ad user list --filter "accountEnabled eq true" -o table

Check your role assignments

az role assignment list --assignee <your UPN> -o table

These commands show LC that you are comfortable with both Terraform and Azure CLI.

Step 10

Can Terraform check Defender?

Terraform itself does not “check” Defender, but you can manage Defender settings as resources.

For example:

azurerm_security_center_contact

azurerm_security_center_subscription_pricing

azurerm_security_center_assessment

azurerm_security_center_setting
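As an illustration of managing a Defender setting as a resource, here is a minimal sketch that sets the Defender for Cloud pricing tier for virtual machines. The resource label "servers" is arbitrary, and the tier shown is an assumption about what you want; "Standard" is the paid tier, so treat this as something to plan and review rather than apply casually.

```hcl
# Sketch: set the Defender for Cloud plan for virtual machines at the
# subscription level. "Standard" is the paid tier; "Free" turns it off.
resource "azurerm_security_center_subscription_pricing" "servers" {
  tier          = "Standard"
  resource_type = "VirtualMachines"
}
```

This changes a subscription-wide setting, which is one more reason to confirm the active subscription before running terraform apply.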

Meaning

Terraform is for configuration

Azure CLI is for inspection

Graph / PowerShell is for deep security reporting

If LC wants real Defender reporting, we use:

Connect-MgGraph

Get-MgSecurityAlert

Get-MgSecuritySecureScore

You already know these.

Step 11

Cleaning up safely

Since we did not deploy anything, no cleanup is required.

If you later create real resources, destroy them with

terraform destroy

Final thoughts

Terraform is one of the most powerful tools in cloud engineering. Once you know how to initialize it, authenticate with Azure, and run plans, you are already ahead of many engineers who feel overwhelmed by IaC. LC will immediately see that you are not just an Exchange guy or a VMware guy. You are becoming a modern DevOps cloud engineer who can manage infrastructure in code.

© 2012–2025 Jet Mariano. All rights reserved.
