Introduction: The Path Is the Practice

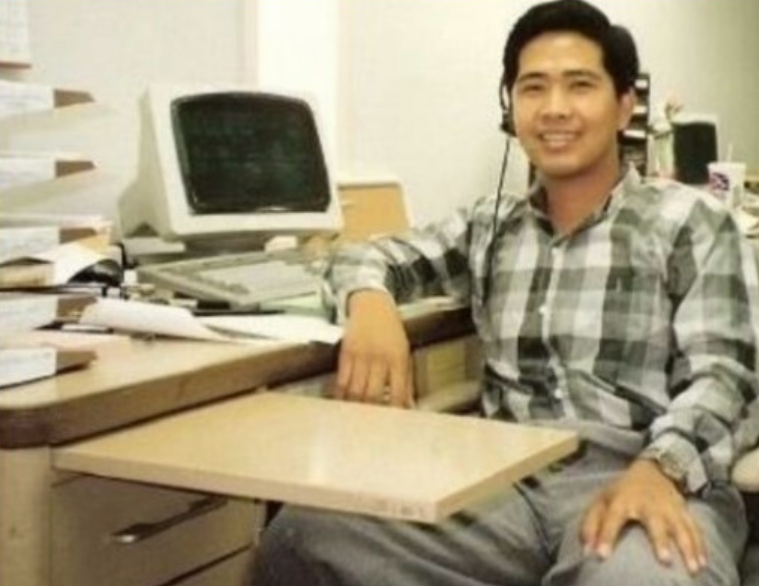

My story didn’t begin with servers or certifications.

It began at All Electronics Corporation in Van Nuys, California, where I worked full-time from 6:30 A.M. to 3:00 P.M., from December 1990 to late 1995. Getting there meant two Metro buses and a one-block walk from the station, rain or shine.

I woke as early as 4 A.M. to catch the first bus at Western and 3rd Street in Los Angeles, and after work I sometimes headed straight to my evening shift at the Taco Bell drive-thru in Glendale.

Those were humble, exhausting days that taught me discipline and grit — lessons that would shape every part of my career.

At All Electronics, I became fascinated by the integrated circuit (IC), the heart of every desktop computer. I wanted to understand it, not just sell it.

Back in my Koreatown apartment, I turned curiosity into calling.

No Google. No YouTube. No AI.

Just library books and endless nights of self-study. I intentionally crashed my computers and rebuilt them until every fix became muscle memory.

Once confident, I started offering free repairs and computer lessons to friends, relatives, and senior citizens — setting up printers, fixing networks, and teaching email basics. Those acts of service opened the door to my first full-time IT job at the University of Southern California (USC) as a PC Specialist.

I still remember waiting at the bus stop in the dark, dreaming of the day I wouldn’t have to ride in the rain. Years later, those same dreams became reality — not through luck, but through faith, discipline, dedication, and gratitude.

The rides changed — from buses to a BMW, an Audi, and now a Tesla — but what never changed was the purpose: to keep moving forward while staying grounded in gratitude.

Season of Refinement

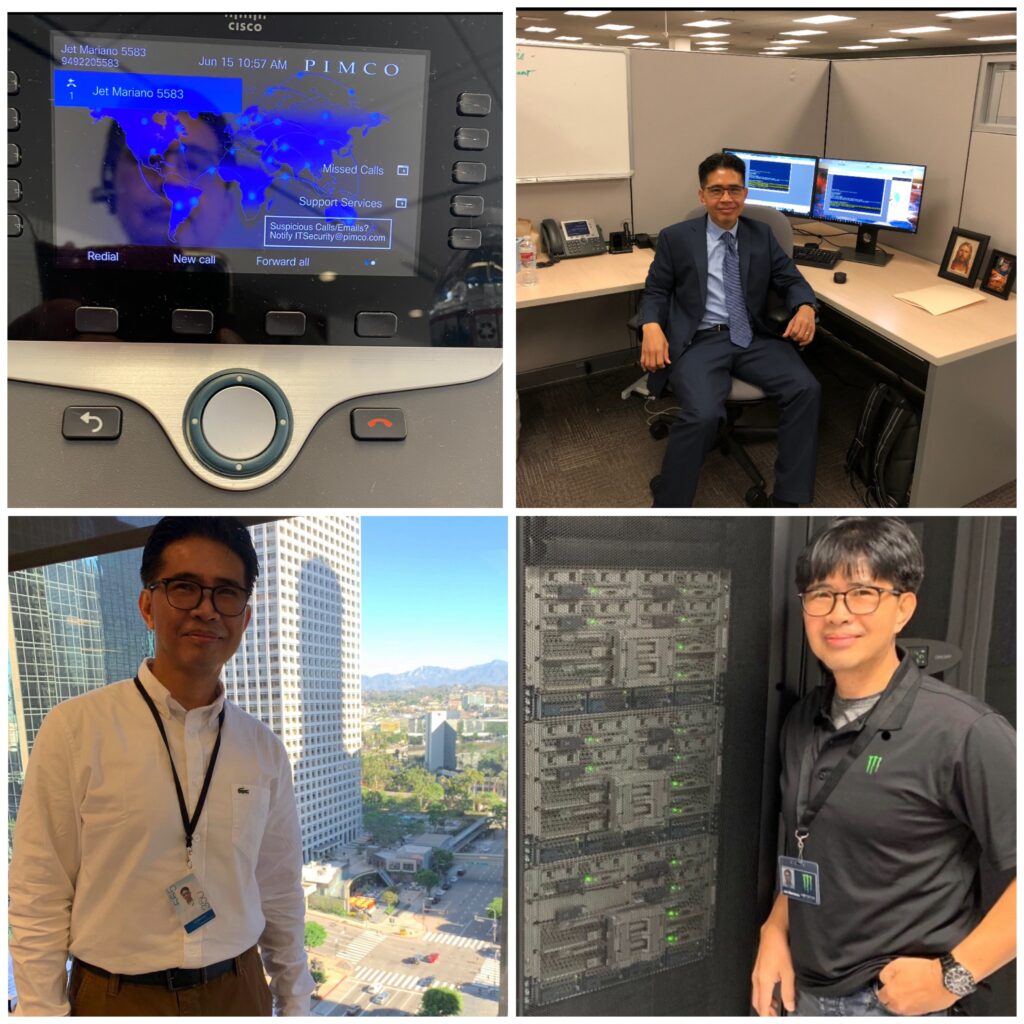

While working full-time at USC, I entered what I call my season of refinement.

By day I supported campus systems and users; by night I was a full-time student at Los Angeles City College (LACC) and a weekend warrior at DeVry University, studying Management in Telecommunications.

It was during this time that Microsoft introduced the MCSE (Microsoft Certified Systems Engineer) program.

One of my professors at LACC encouraged me to earn it, saying, “Once you have that license, companies will chase you.”

He was right — that MCSE became my ticket to GTE (now Verizon), my first step into enterprise-scale IT.

My tenure at GTE was brief because Aerospace came calling with a six-figure offer just before Y2K — an opportunity too good to refuse.

After Aerospace, I founded my own consulting firm — Ahead InfoTech (AIT) — and entered what I now call my twelve years of plenty.

One of my earliest major clients, USC Perinatal Group, asked me to design and implement a secure LAN/WAN connecting satellite offices across major hospitals, including California Hospital Medical Center, Saint Joseph of Burbank and Mission Hills, and Hollywood Presbyterian Hospital.

We used T1 lines with CSU/DSU units and Fortinet firewalls; I supplied every workstation and server under my own AIT brand.

Through that success I was referred to additional projects for Tarzana and San Gabriel Perinatal Groups, linked by dedicated frame-relay circuits — early-era networking at its finest.

Momentum led to new partnerships with The Claremont Colleges and the City of West Covina, where I served as Senior Consultant handling forensic analysis and SMTP/email engineering.

Word spread. One attorney client introduced me to an opportunity in American Samoa to help design and build a regional ISP, and later to a contract with Sanyo Philippines.

During this period Fortinet was still new, and I became one of its early resellers.

Refusing to rely on mass-produced systems, I built AIT servers and workstations from the ground up for every environment.

DSL was just emerging, yet most clients still relied on dedicated T1s — real hands-on networking that demanded precision and patience.

Those were the twelve years of plenty — projects that stretched from local hospitals to overseas data links, from LAN cables to international circuits.

By the time AWS arrived in 2006 and Azure followed in 2010, I had already been building and managing distributed networks for years.

When I returned to Corporate America, my first full-time role was at Payforward, where I led the on-premises-to-AWS migration. I designed multi-region environments across US-East (Availability Zones 1a and 1b) and US-West, complete with VPCs, subnets, IAM policies, and cloud security controls.

That’s when I earned my AWS certifications, completing a journey that had begun with physical servers and matured in the cloud.

Education, experience, and certification merged into one lesson:

Discipline comes first. Validation follows.

Degrees and credentials were never my starting line — they were the icing on the cake of years of practice, service, and faith.

My Philosophy: One Discipline, Many Forms

Whether in Martial Arts, IT, or Photography, mastery comes from repetition, humility, and curiosity.

As Ansel Adams wrote:

“When words become unclear, I shall focus with photographs. When images become inadequate, I shall be content with silence.”

Everyone can take a photo; not everyone captures a masterpiece.

Everyone can study tech; not everyone understands its rhythm.

Excellence lives in awareness — the moment when curiosity meets purpose.

The Infrastructure Engineer Path

1️⃣ Foundations

Learn the essentials: Windows Server, Active Directory, DNS/DHCP, Group Policy Objects (GPOs), networking (VLANs, VPNs), Linux basics, and PowerShell.

Free Resources:

2️⃣ Cloud Platforms

Start with the AZ-104 (Microsoft Azure Administrator) certification.

Use the free tiers of Azure, AWS, and GCP to build practice labs.

Courses:

3️⃣ Automation & DevOps

Learn infrastructure as code (IaC) with Terraform or Bicep, then Docker, Kubernetes, and CI/CD.

Watch TechWorld with Nana.

4️⃣ Labs & Simulators

No hardware? Try:

5️⃣ Portfolio

Document every lab, build diagrams, post scripts on GitHub, and write short lessons learned.
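As one illustration of the kind of small, documented script that could sit in such a portfolio, here is a minimal sketch that splits a lab network into one subnet per availability zone using Python's standard `ipaddress` module. The function name `plan_subnets`, the `10.0.0.0/16` range, and the zone labels are hypothetical lab values chosen for the example, not a record of any real environment.

```python
import ipaddress


def plan_subnets(vpc_cidr, zones, new_prefix=24):
    """Assign one non-overlapping /new_prefix subnet to each zone, in order."""
    network = ipaddress.ip_network(vpc_cidr)
    # subnets() yields consecutive, non-overlapping blocks of the requested size.
    blocks = network.subnets(new_prefix=new_prefix)
    plan = {}
    for zone in zones:
        try:
            plan[zone] = next(blocks)
        except StopIteration:
            raise ValueError(
                f"{vpc_cidr} has no room left for a /{new_prefix} in {zone}"
            ) from None
    return plan


if __name__ == "__main__":
    # Hypothetical lab values; adjust to your own environment.
    lab_plan = plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])
    for zone, subnet in lab_plan.items():
        print(f"{zone}: {subnet} ({subnet.num_addresses} addresses)")
```

A short README next to a script like this, noting what the lab was for and what went wrong along the way, covers the "lessons learned" part.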

Final Reflection

From bus stops to boardrooms, from fixing desktops to deploying clouds — the principles never changed: serve first, learn always, and build things that last.

This blog will continue to evolve as technology changes — come back often and grow with it.

🪶 Closing Note

I share this story not to boast, but to inspire those still discovering their own path in technology.

Everything here is told from personal experience and memory; if a date or detail differs from official records, it’s unintentional.

I’m grateful for mentors like my LACC professor, who once told me to look up a name not yet famous, Bill Gates, and earn my MCSE+I.

He was right: that single decision opened countless doors.

I don’t claim to know everything; I simply kept learning, serving, and sharing.

My living witnesses are my son, my younger brother, and friends who once worked with me and now thrive in IT.

After all these years, I’m still standing — doing what I love most: helping people through Information Technology.

⚖️ Legal Disclaimer

All events and company names mentioned are described from personal recollection for educational and inspirational purposes only. Any factual inaccuracies are unintentional. Opinions expressed are my own and do not represent any past or current employer.

© 2012–2025 Jet Mariano. All rights reserved.

For usage terms, please see the Legal Disclaimer.